A Comprehensive Coding Guide to Creating Advanced Round-Robin Multi-Agent Workflows with Microsoft AutoGen

In this tutorial, we demonstrate how Microsoft’s AutoGen framework enables developers to orchestrate complex multi-agent workflows with minimal code. By leveraging AutoGen’s RoundRobinGroupChat and TeamTool abstractions, you can assemble specialist assistants, such as a Researcher, FactChecker, Critic, Summarizer, and Editor, into a cohesive “DeepDive” tool. AutoGen handles the intricacies of turn-taking, termination conditions, and streaming output, allowing you to focus on defining each agent’s expertise and system prompt rather than plumbing together callbacks or manual prompt chains. Whether you are conducting in-depth research, validating facts, refining prose, or integrating third-party tools, AutoGen provides a unified API that scales from simple two-agent pipelines to elaborate five-agent collaborations.

!pip install -q autogen-agentchat[gemini] autogen-ext[openai] nest_asyncio

We install the AutoGen AgentChat package with Gemini support, the OpenAI extension for API compatibility, and the nest_asyncio library, which patches the notebook’s event loop. Together these provide the components needed to run asynchronous, multi-agent workflows in Colab.

import os, nest_asyncio
from getpass import getpass

# Allow nested event loops inside the notebook's already-running loop.
nest_asyncio.apply()
# Prompt for the key without echoing it, then expose it to the model client.
os.environ["GEMINI_API_KEY"] = getpass("Enter your Gemini API key: ")

We import and apply nest_asyncio to enable nested event loops in the notebook environment, then use getpass to securely prompt for your Gemini API key and store it in os.environ, where the model client can read it for authentication.
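If the notebook is rerun often, it can help to reuse a key that is already in the environment instead of prompting every time. The helper below is a minimal sketch of that idea; `ensure_api_key` and its `prompt` parameter are hypothetical names introduced here for illustration and are not part of AutoGen or the original notebook.

```python
import os
from getpass import getpass
from typing import Callable


def ensure_api_key(var_name: str, prompt: Callable[[str], str] = getpass) -> str:
    """Return the key stored in os.environ[var_name], prompting only if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Hidden prompt by default: getpass does not echo the typed value.
        key = prompt(f"Enter your {var_name}: ").strip()
        if not key:
            raise ValueError(f"{var_name} must not be empty")
        os.environ[var_name] = key
    return key
```

Calling `ensure_api_key("GEMINI_API_KEY")` would then prompt on the first run only, and an empty entry fails early rather than surfacing later as an authentication error.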

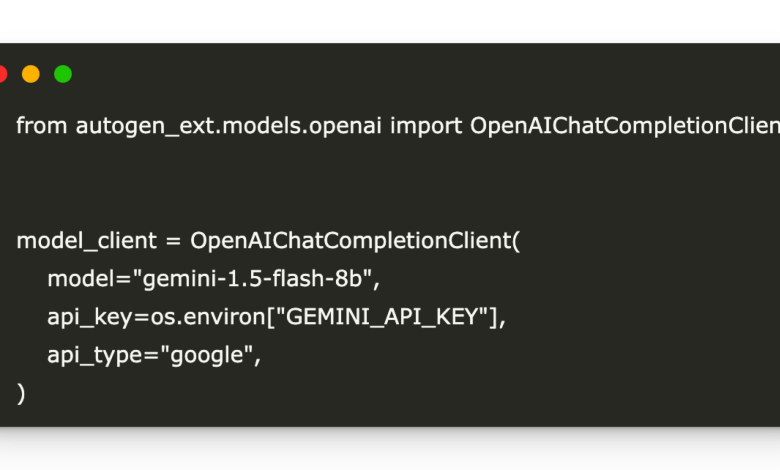

from autogen_ext.models.openai import OpenAIChatCompletionClient

model_client = OpenAIChatCompletionClient(
    model="gemini-1.5-flash-8b",
    api_key=os.environ["GEMINI_API_KEY"],
    api_type="google",
)

We initialize an OpenAI-compatible chat completion client pointed at Google’s Gemini by specifying the gemini-1.5-flash-8b model, injecting the stored Gemini API key, and setting api_type="google". This gives us a ready-to-use model_client for the downstream AutoGen agents.
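To make “OpenAI-compatible” a little more concrete: such a client sends requests in the Chat Completions shape, that is, a model name plus a list of role-tagged messages. The sketch below only builds that payload as a plain dictionary and sends nothing over the network. `build_chat_request` is a hypothetical helper, and whatever additional fields AutoGen’s client adds are not shown.

```python
from typing import Any


def build_chat_request(model: str, system_message: str, user_message: str) -> dict[str, Any]:
    """Assemble a Chat Completions style request body (illustration only)."""
    return {
        "model": model,
        "messages": [
            # The system message carries the agent's role instructions.
            {"role": "system", "content": system_message},
            # The user message carries the task for this turn.
            {"role": "user", "content": user_message},
        ],
    }
```

Because every agent in this tutorial shares one client, only the system message differs between their requests.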

from autogen_agentchat.agents import AssistantAgent

researcher = AssistantAgent(name="Researcher", system_message="Gather and summarize factual info.", model_client=model_client)
factchecker = AssistantAgent(name="FactChecker", system_message="Verify facts and cite sources.", model_client=model_client)
critic = AssistantAgent(name="Critic", system_message="Critique clarity and logic.", model_client=model_client)
summarizer = AssistantAgent(name="Summarizer", system_message="Condense into a brief executive summary.", model_client=model_client)
editor = AssistantAgent(name="Editor", system_message="Polish language and signal APPROVED when done.", model_client=model_client)

We define five specialized assistant agents, Researcher, FactChecker, Critic, Summarizer, and Editor, each initialized with a role-specific system message and the shared Gemini-powered model client. This lets them respectively gather information, verify accuracy, critique content, condense it into a summary, and polish the language within an automated workflow.
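Since the five agents differ only in name and system message, the roles can also be kept as data and checked before any agent is constructed. The following is a minimal sketch under that assumption; `RoleSpec` and `validate_roles` are hypothetical names, and the checks (identifier-style names, no duplicates, non-empty prompts) are conservative choices made here rather than a statement of AutoGen’s exact rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleSpec:
    """Hypothetical container for one agent's name and system prompt."""
    name: str
    system_message: str


ROLES = [
    RoleSpec("Researcher", "Gather and summarize factual info."),
    RoleSpec("FactChecker", "Verify facts and cite sources."),
    RoleSpec("Critic", "Critique clarity and logic."),
    RoleSpec("Summarizer", "Condense into a brief executive summary."),
    RoleSpec("Editor", "Polish language and signal APPROVED when done."),
]


def validate_roles(roles: list[RoleSpec]) -> list[RoleSpec]:
    """Reject duplicate names, non-identifier names, and empty prompts."""
    seen: set[str] = set()
    for role in roles:
        if not role.name.isidentifier():
            raise ValueError(f"agent name {role.name!r} is not identifier-like")
        if role.name in seen:
            raise ValueError(f"duplicate agent name {role.name!r}")
        if not role.system_message.strip():
            raise ValueError(f"agent {role.name!r} has an empty system message")
        seen.add(role.name)
    return list(roles)


# With the tutorial's imports in scope, the agents could then be built as:
# agents = [AssistantAgent(name=r.name, system_message=r.system_message,
#                          model_client=model_client) for r in validate_roles(ROLES)]
```

Keeping the list order explicit matters here, because a round-robin team speaks in the order its participants are given.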

from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_agentchat.conditions import MaxMessageTermination, TextMentionTermination

max_msgs = MaxMessageTermination(max_messages=20)
text_term = TextMentionTermination(text="APPROVED", sources=["Editor"])
termination = max_msgs | text_term

team = RoundRobinGroupChat(
    participants=[researcher, factchecker, critic, summarizer, editor],
    termination_condition=termination
)

We import the RoundRobinGroupChat class along with two termination conditions, then combine them into a single stop rule that fires after 20 total messages or when the Editor agent mentions “APPROVED”. Finally, we instantiate a round-robin team of the five specialized agents with this combined termination logic, so that they cycle through research, fact-checking, critique, summarization, and editing until one of the stop conditions is met.
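The turn-taking and stop rule described above can be illustrated without any library. The sketch below is not AutoGen’s implementation: `run_round_robin` and the `respond` callback are hypothetical, and counting the initial task toward the message limit is an assumption of this sketch.

```python
from typing import Callable, Sequence

Message = tuple[str, str]  # (source, text)


def run_round_robin(
    task: str,
    participants: Sequence[str],
    respond: Callable[[str, list[Message]], str],
    max_messages: int = 20,
    stop_text: str = "APPROVED",
    stop_sources: Sequence[str] = ("Editor",),
) -> tuple[list[Message], str]:
    """Cycle through participants in order until either stop condition fires."""
    if not participants:
        raise ValueError("at least one participant is required")
    transcript: list[Message] = [("user", task)]  # assumption: the task counts as a message
    turn = 0
    while True:
        # Condition 1: the message budget is used up.
        if len(transcript) >= max_messages:
            return transcript, "max_messages"
        speaker = participants[turn % len(participants)]
        text = respond(speaker, transcript)
        transcript.append((speaker, text))
        # Condition 2: the keyword counts only when an allowed source says it.
        if speaker in stop_sources and stop_text in text:
            return transcript, "text_mention"
        turn += 1
```

Restricting the keyword check to the Editor means another agent that happens to write “APPROVED” in its own message does not end the run early, which is the role `sources=["Editor"]` plays in the tutorial’s configuration.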

from autogen_agentchat.tools import TeamTool

deepdive_tool = TeamTool(team=team, name="DeepDive", description="Collaborative multi-agent deep dive")

We wrap our RoundRobinGroupChat team in a TeamTool named “DeepDive” with a human-readable description, effectively packaging the entire multi-agent workflow into a single callable tool that other agents can invoke seamlessly.
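Conceptually, wrapping a team as a tool means hiding a whole conversation behind one call with a name and a description. The sketch below shows that shape in plain Python. `CallableTeamTool` is a hypothetical stand-in, not the TeamTool class, and returning only the last message is a simplification chosen for this illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CallableTeamTool:
    """Hypothetical wrapper that exposes a multi-step team run as one callable."""
    name: str
    description: str
    run_team: Callable[[str], list[str]]  # takes a task, returns the team's messages

    def __call__(self, task: str) -> str:
        messages = self.run_team(task)
        if not messages:
            raise RuntimeError(f"{self.name} produced no messages")
        # Simplification: hand back only the final message as the tool result.
        return messages[-1]


def fake_team(task: str) -> list[str]:
    """Stand-in for a real team run, used only to exercise the wrapper."""
    return [f"research on {task}", f"summary of {task}", f"final report on {task}"]


deepdive = CallableTeamTool("DeepDive", "Collaborative multi-agent deep dive", fake_team)
```

The caller never sees the intermediate turns, only a name it can select, a description that says when to use it, and a single result.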

host = AssistantAgent(
    name="Host",
    model_client=model_client,
    tools=[deepdive_tool],
    system_message="You have access to a DeepDive tool for in-depth research."
)

We create a “Host” assistant agent configured with the shared Gemini-powered model_client, grant it the DeepDive team tool for orchestrating in-depth research, and prime it with a system message that tells it about its ability to invoke the multi-agent DeepDive workflow.
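When an agent has tools, the model typically replies with a tool call (a tool name plus JSON-encoded arguments) and the framework looks up and runs the matching function. The sketch below shows that dispatch step in isolation. `dispatch_tool_call`, the `call` dictionary layout, and the `task` argument name are assumptions for illustration, not AutoGen’s internal API.

```python
import json
from typing import Any, Callable


def dispatch_tool_call(tools: dict[str, Callable[..., Any]], call: dict[str, str]) -> Any:
    """Run the tool named in `call` with its JSON-encoded keyword arguments."""
    name = call["name"]
    if name not in tools:
        raise KeyError(f"unknown tool: {name}")
    arguments = json.loads(call["arguments"])
    return tools[name](**arguments)


# Hypothetical registry with a single tool standing in for DeepDive.
tools = {"DeepDive": lambda task: f"report on {task}"}
call = {"name": "DeepDive", "arguments": json.dumps({"task": "Model Context Protocol"})}
result = dispatch_tool_call(tools, call)
```

The system message matters in this setup because the model decides whether to emit a tool call at all; telling the Host that DeepDive exists for in-depth research nudges it toward delegating.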

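import asyncio

async def run_deepdive(topic: str):
    # Ask the Host to research the topic; it can delegate to the DeepDive tool.
    result = await host.run(task=f"Deep dive on: {topic}")
    print("🔍 DeepDive result:\n", result)
    # Release the model client's resources once the run is finished.
    await model_client.close()

topic = "Impacts of Model Context Protocol on Agentic AI"

# Reuse the notebook's existing loop (made re-entrant by nest_asyncio).
loop = asyncio.get_event_loop()
loop.run_until_complete(run_deepdive(topic))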

Finally, we define an asynchronous run_deepdive function that tells the Host agent to execute the DeepDive team tool on a given topic, prints the full result, and then closes the model client to release its resources. We then grab Colab’s existing asyncio loop and run the coroutine to completion, which gives us seamless, synchronous-style execution in the notebook.
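One refinement worth considering is closing the client in a `finally` block, so that cleanup still happens when the run raises. The sketch below demonstrates the pattern with a stand-in client; `FakeClient` and `run_with_cleanup` are hypothetical names, and `asyncio.run` is used because this snippet assumes a plain script where no event loop is already running.

```python
import asyncio
from typing import Awaitable, Callable


class FakeClient:
    """Stand-in for a model client that exposes an async close()."""

    def __init__(self) -> None:
        self.closed = False

    async def close(self) -> None:
        self.closed = True


async def run_with_cleanup(
    run_task: Callable[[str], Awaitable[str]], client: FakeClient, topic: str
) -> str:
    """Run the task and always close the client, even if the task fails."""
    try:
        return await run_task(f"Deep dive on: {topic}")
    finally:
        await client.close()


async def echo_task(task: str) -> str:
    """Trivial task used only to exercise the wrapper."""
    return task.upper()


client = FakeClient()
output = asyncio.run(run_with_cleanup(echo_task, client, "mcp"))
```

Inside a notebook, where a loop is already running, the tutorial’s `get_event_loop().run_until_complete(...)` approach with nest_asyncio applied serves the same purpose as `asyncio.run` here.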

In conclusion, integrating Google Gemini through AutoGen’s OpenAI-compatible client and wrapping our multi-agent team as a callable TeamTool gives us a powerful template for building highly modular and reusable workflows. With nest_asyncio handling the notebook’s event loop, AutoGen abstracts away streaming responses and termination logic, allowing us to iterate quickly on agent roles and overall orchestration. This pattern streamlines the development of collaborative AI systems and lays the foundation for extending into retrieval pipelines, dynamic selectors, or conditional execution strategies.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million views per month, demonstrating its popularity among its audience.