Claude Predicts Ethereum, Cardano, and XRP Prices for 2025

The advanced AI chatbot Claude, from Anthropic, predicts that a very favorable period awaits cryptocurrencies this year. Thanks to the explosive rise of Bitcoin, which reached a price of $111,814 on May 22, excitement is again growing among investors about a possible new digital gold rush.

Claude highlights several leading altcoins that could see significant price growth. This optimistic forecast is based on a combination of technical analysis, project fundamentals, industry developments, and macroeconomic factors.

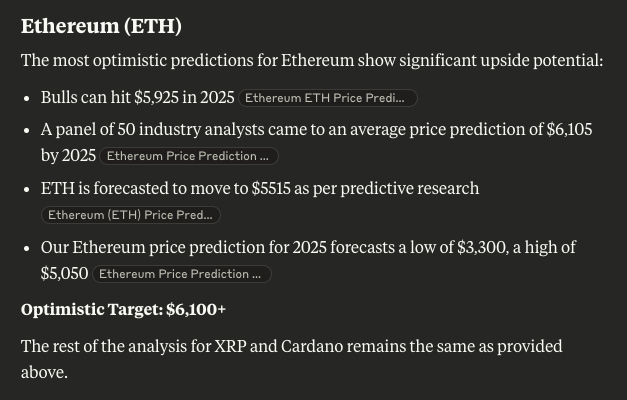

Ethereum (ETH): Claude's prediction

Ethereum (ETH) remains a key pillar of the blockchain ecosystem. Since its launch in 2015, it has grown into the second-largest cryptocurrency in the world, with a market capitalization that now exceeds $306 billion.

Ethereum's strength lies in the versatility of its smart contracts, which form the foundation of decentralized finance (DeFi). About $60 billion is currently locked on the Ethereum platform (TVL, total value locked).

Claude sets an optimistic target of $6,500 by the end of the year, which would be roughly 2.6 times the current price of approximately $2,515.

Several factors support this forecast. Ethereum holds a firm position in Web3 infrastructure, which gives it a stability and market influence that few other cryptocurrencies can match.

In addition, growing institutional adoption, particularly through Ethereum ETFs, opens the door for traditional finance (TradFi) to invest in ETH through regulated channels.

Another positive catalyst could come if Donald Trump delivers on his pledge to enact a comprehensive regulatory framework for crypto in the US. Such a policy change could trigger a final wave of growth before crypto becomes fully accepted by the mainstream.

Large investors (whales) also appear to be returning. After several months of consolidation and the formation of a "falling wedge" pattern, Ethereum began to recover in early April. On May 7 it jumped from $1,800 to $2,412, which points to renewed confidence among large investors.

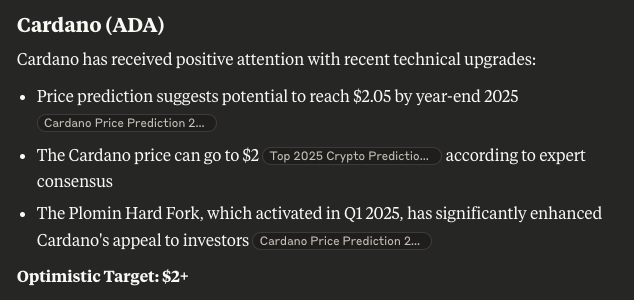

Cardano (ADA): An established Ethereum competitor gains momentum

Cardano (ADA) is again attracting public attention, partly thanks to recent political statements. President Donald Trump mentioned the possibility of ADA being included in national crypto reserves, but only as an asset that would be "held": not purchased, but acquired through seizures.

Founded by Charles Hoskinson, a former Ethereum co-founder, Cardano prides itself on a research-driven approach to development, with an emphasis on scalability and long-term sustainability. The project is built on peer-reviewed innovations and formal verification, which sets it apart from many competitors.

With a current market capitalization of $23.9 billion, Cardano is a stable rival to Ethereum, and in terms of network growth it is approaching Solana.

Claude predicts that the price of ADA could rise to $2.05 by the end of the year, which would be more than triple the current price of around $0.6636. Certain technical patterns suggest that a breakout could come even sooner.

ADA's price has formed a bullish falling-wedge pattern that began in late 2024. Past the $1.10 level, a sharp jump toward $1.50 by the end of summer is possible.

For long-term investors, Cardano's scientific approach and strong DeFi community continue to make it one of the most serious candidates for "Ethereum killer" as the crypto market matures.

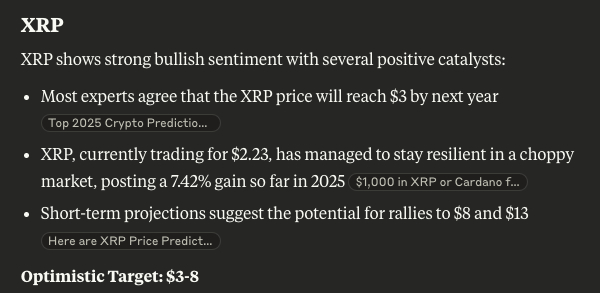

Ripple (XRP): Claude predicts a major breakout for this "resilient" cryptocurrency

According to the Claude AI model, the XRP token could reach $13 in 2025, which would be a jump of as much as 483% from the current price of $2.23. This forecast is based on positive legal developments, growing institutional usage, and changes in the regulatory environment.
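The multiples and percentages quoted for the three forecasts can be checked with simple arithmetic. The sketch below is only an illustration: the helper names `multiple` and `pct_gain` are hypothetical, and the prices are the current and target figures quoted in this article, not live market data.

```python
# Illustrative check of the article's figures; helper names are hypothetical.

def multiple(current: float, target: float) -> float:
    """How many times the current price the target price is."""
    return target / current


def pct_gain(current: float, target: float) -> float:
    """Percentage increase from the current price to the target price."""
    return (target - current) / current * 100


# (current price, target price) in USD, as quoted in the article.
forecasts = {
    "ETH": (2515.0, 6500.0),
    "ADA": (0.6636, 2.05),
    "XRP": (2.23, 13.0),
}

for symbol, (current, target) in forecasts.items():
    print(
        f"{symbol}: {multiple(current, target):.2f}x, "
        f"{pct_gain(current, target):+.0f}%"
    )
```

With these inputs the multiples come out at about 2.6 for ETH and just over 3 for ADA, and the XRP gain rounds to 483%, in line with the figures quoted in the text.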

Earlier this year, the United Nations endorsed XRP as a tool for fast, transparent, and regulation-compliant global payments, further confirming its practical value.

Ripple also scored an important legal victory when a court ruled that retail sales of XRP do not violate US securities laws, giving small investors greater legal certainty. Although some uncertainty remains around institutional transactions, the trend is clearly turning in Ripple's favor.

Despite minor corrections, XRP enjoys strong support around the $2 level, and in the coming months it could test the $3 mark.

If that level is broken, the next target is $5. However, only the establishment of a comprehensive regulatory framework, particularly if it is backed by a second Trump administration, could push XRP to the projected $13.

Solaxy (SOLX): A new star among crypto presale projects

While Claude's analysis is dominated by well-known tokens such as Ethereum and XRP, many experienced investors are turning their attention to the presale market in search of early-stage projects with high growth potential. One of the most notable among them right now is Solaxy ($SOLX).

Solaxy aims to redefine the digital economy by integrating artificial intelligence and decentralization, with an emphasis on smart management of energy and digital content. The project's goal is to give users full control over their data and digital interactions without the need for intermediaries.

At the center of the Solaxy ecosystem is a premium platform, available only to $SOLX token holders, that offers access to exclusive AI tools, content, and options for personalized interaction and automation.

Since the start of the presale, Solaxy has raised significant investment. The current token price is 0.05575 USD, which offers an attractive entry point for early investors.

$SOLX token holders can unlock additional functionality on the platform, including participation in a staking program with a fixed annual yield of 20% (APY), available through the official Solaxy website.

For more information about the project, visit solary.io or follow Solaxy on X (formerly Twitter) and Telegram.
