A Coding Implementation of an AI Agent with Live Python Execution and Automated Validation

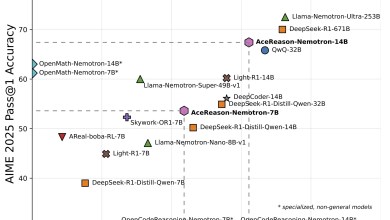

In this tutorial, we explore how to harness advanced AI agents, augmented with Python execution and result-validation capabilities, to tackle complex computational tasks. By integrating LangChain's ReAct agent framework with Anthropic's Claude API, we build an end-to-end solution that generates Python code, executes it live, captures its output, maintains execution state, and automatically validates the results against expected properties or test cases. This loop of “write → run → validate” lets you develop robust analytics, algorithms, and simple ML pipelines with confidence at every step.

!pip install langchain langchain-anthropic langchain-core anthropic

We install the core LangChain framework along with the Anthropic integration and its core utilities, so that both the agent orchestration tools (langchain, langchain-core) and the Claude-specific bindings (langchain-anthropic, anthropic) are available in your environment.

import os
from langchain.agents import create_react_agent, AgentExecutor
from langchain.tools import Tool
from langchain_core.prompts import PromptTemplate
from langchain_anthropic import ChatAnthropic
import sys
import io
import re
import json
from typing import Dict, Any, List

We bring together everything needed to build the ReAct-style agent: os for access to environment variables, LangChain's agent constructors (create_react_agent, AgentExecutor), the Tool class for defining custom actions, PromptTemplate for shaping the chain-of-thought prompt, and Anthropic's ChatAnthropic client for connecting to Claude. The standard Python modules (sys, io, re, json) handle I/O capture, regular expressions, and serialization, while typing supplies type hints for clearer, more maintainable code.

class PythonREPLTool:
    def __init__(self):
        self.globals_dict = {
            '__builtins__': __builtins__,
            'json': json,
            're': re
        }
        # Share one namespace for globals and locals so that names defined by
        # exec() persist and are visible through globals() in later snippets.
        self.locals_dict = self.globals_dict
        self.execution_history = []

    def run(self, code: str) -> str:
        try:
            # Redirect stdout/stderr so everything the snippet prints is captured.
            old_stdout = sys.stdout
            old_stderr = sys.stderr
            sys.stdout = captured_output = io.StringIO()
            sys.stderr = captured_error = io.StringIO()

            execution_result = None
            try:
                # Try the code as a single expression first to keep its value.
                result = eval(code, self.globals_dict, self.locals_dict)
                execution_result = result
                if result is not None:
                    print(result)
            except SyntaxError:
                # Not an expression: run it as one or more statements.
                exec(code, self.globals_dict, self.locals_dict)

            output = captured_output.getvalue()
            error_output = captured_error.getvalue()

            sys.stdout = old_stdout
            sys.stderr = old_stderr

            self.execution_history.append({
                'code': code,
                'output': output,
                'result': execution_result,
                'error': error_output
            })

            response = f"**Code Executed:**\n```python\n{code}\n```\n\n"
            if error_output:
                response += f"**Errors/Warnings:**\n{error_output}\n\n"
            response += f"**Output:**\n{output if output.strip() else 'No console output'}"

            if execution_result is not None and not output.strip():
                response += f"\n**Return Value:** {execution_result}"

            return response

        except Exception as e:
            # Restore the real streams before reporting the failure.
            sys.stdout = old_stdout
            sys.stderr = old_stderr

            error_info = f"**Code Executed:**\n```python\n{code}\n```\n\n**Runtime Error:**\n{str(e)}\n**Error Type:** {type(e).__name__}"

            self.execution_history.append({
                'code': code,
                'output': '',
                'result': None,
                'error': str(e)
            })

            return error_info

    def get_execution_history(self) -> List[Dict[str, Any]]:
        return self.execution_history

    def clear_history(self):
        self.execution_history = []

The PythonREPLTool class encapsulates a stateful, in-process Python REPL: it executes arbitrary code (evaluating expressions or running statements), redirects stdout/stderr to capture output and errors, and keeps a history of every execution. It returns a formatted summary containing the executed code, any console output or errors, and the return value, giving transparent, reproducible feedback for each snippet the agent runs.
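The capture-and-execute idea can be sketched in isolation. The following is a minimal illustration rather than the tutorial's class: the helper name `run_snippet` is hypothetical, and it relies on the standard library's `contextlib.redirect_stdout` instead of reassigning `sys.stdout` by hand.

```python
import contextlib
import io


def run_snippet(code: str, namespace: dict) -> dict:
    """Run `code` in `namespace`, capturing printed output and any error.

    Hypothetical helper for illustration; not part of the tutorial's class.
    """
    buffer = io.StringIO()
    record = {"code": code, "output": "", "result": None, "error": ""}
    try:
        with contextlib.redirect_stdout(buffer):
            try:
                # Expressions are evaluated so that their value can be kept.
                record["result"] = eval(code, namespace)
            except SyntaxError:
                # Statements (assignments, defs, loops) fall back to exec.
                exec(code, namespace)
    except Exception as exc:
        # The error is recorded instead of being raised to the caller.
        record["error"] = f"{type(exc).__name__}: {exc}"
    record["output"] = buffer.getvalue()
    return record


namespace = {}
history = [
    run_snippet("x = 21", namespace),       # statement: stored in namespace
    run_snippet("x * 2", namespace),        # expression: value is kept
    run_snippet("print('hi')", namespace),  # expression whose value is None
    run_snippet("1 / 0", namespace),        # failure: recorded as an error
]

for entry in history:
    print(entry)
```

Because a single dictionary serves as the namespace, the value assigned in the first snippet is still available to the second one, which is the "state between executions" behavior the tool description promises.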

class ResultValidator:
    def __init__(self, python_repl: PythonREPLTool):
        self.python_repl = python_repl

    def validate_mathematical_result(self, description: str, expected_properties: Dict[str, Any]) -> str:
        """Validate mathematical computations"""
        # The generated code must start at column 0, so the template is not indented.
        # {description!r} keeps a multi-line description on a single comment line.
        validation_code = f"""
# Validation for: {description!r}
validation_results = {{}}

# Get the last execution results
history = {self.python_repl.execution_history}
if history:
    last_execution = history[-1]
    print(f"Last execution output: {{last_execution['output']}}")

    # Extract numbers from the output
    import re
    numbers = re.findall(r'\\d+(?:\\.\\d+)?', last_execution['output'])
    if numbers:
        numbers = [float(n) for n in numbers]
        validation_results['extracted_numbers'] = numbers

        # Validate expected properties
        # (the min_value and sum_range checks mirror the max_value check)
        for prop, expected_value in {expected_properties}.items():
            if prop == 'count':
                actual_count = len(numbers)
                validation_results[f'count_check'] = actual_count == expected_value
                print(f"Count validation: Expected {{expected_value}}, Got {{actual_count}}")
            elif prop == 'max_value':
                if numbers:
                    max_val = max(numbers)
                    validation_results[f'max_check'] = max_val <= expected_value
                    print(f"Max value validation: {{max_val}} <= {{expected_value}} = {{max_val <= expected_value}}")
            elif prop == 'min_value':
                if numbers:
                    min_val = min(numbers)
                    validation_results[f'min_check'] = min_val >= expected_value
                    print(f"Min value validation: {{min_val}} >= {{expected_value}} = {{min_val >= expected_value}}")
            elif prop == 'sum_range':
                if numbers:
                    total = sum(numbers)
                    min_sum, max_sum = expected_value
                    validation_results[f'sum_check'] = min_sum <= total <= max_sum
                    print(f"Sum validation: {{min_sum}} <= {{total}} <= {{max_sum}} = {{min_sum <= total <= max_sum}}")

validation_results
"""
        return self.python_repl.run(validation_code)

    def validate_data_analysis(self, description: str, expected_structure: Dict[str, Any]) -> str:
        """Validate data analysis results"""
        validation_code = f"""
# Data Analysis Validation for: {description!r}
validation_results = {{}}

# Check if required variables exist in global scope
required_vars = {list(expected_structure.keys())}
existing_vars = []

for var_name in required_vars:
    if var_name in globals():
        existing_vars.append(var_name)
        var_value = globals()[var_name]
        validation_results[f'{{var_name}}_exists'] = True
        validation_results[f'{{var_name}}_type'] = type(var_value).__name__

        # Type-specific validations
        if isinstance(var_value, (list, tuple)):
            validation_results[f'{{var_name}}_length'] = len(var_value)
        elif isinstance(var_value, dict):
            validation_results[f'{{var_name}}_keys'] = list(var_value.keys())
        elif isinstance(var_value, (int, float)):
            validation_results[f'{{var_name}}_value'] = var_value

        print(f"✓ Variable '{{var_name}}' found: {{type(var_value).__name__}} = {{var_value}}")
    else:
        validation_results[f'{{var_name}}_exists'] = False
        print(f"✗ Variable '{{var_name}}' not found")

print(f"\\nFound {{len(existing_vars)}}/{{len(required_vars)}} required variables")

# Additional structure validation
for var_name, expected_type in {expected_structure}.items():
    if var_name in globals():
        actual_type = type(globals()[var_name]).__name__
        validation_results[f'{{var_name}}_type_match'] = actual_type == expected_type
        print(f"Type check '{{var_name}}': Expected {{expected_type}}, Got {{actual_type}}")

validation_results
"""
        return self.python_repl.run(validation_code)

    def validate_algorithm_correctness(self, description: str, test_cases: List[Dict[str, Any]]) -> str:
        """Validate algorithm implementations with test cases"""
        # The generated code must start at column 0, so the template is not indented.
        # {description!r} keeps a multi-line description on a single comment line.
        validation_code = f"""
# Algorithm Validation for: {description!r}
validation_results = {{}}
test_results = []

test_cases = {test_cases}

for i, test_case in enumerate(test_cases):
    test_name = test_case.get('name', f'Test {{i+1}}')
    input_val = test_case.get('input')
    expected = test_case.get('expected')
    function_name = test_case.get('function')

    print(f"\\nRunning {{test_name}}:")
    print(f"Input: {{input_val}}")
    print(f"Expected: {{expected}}")

    try:
        if function_name and function_name in globals():
            func = globals()[function_name]
            if callable(func):
                # List/tuple inputs are unpacked as positional arguments.
                if isinstance(input_val, (list, tuple)):
                    result = func(*input_val)
                else:
                    result = func(input_val)

                passed = result == expected
                test_results.append({{
                    'test_name': test_name,
                    'input': input_val,
                    'expected': expected,
                    'actual': result,
                    'passed': passed
                }})

                status = "✓ PASS" if passed else "✗ FAIL"
                print(f"Actual: {{result}}")
                print(f"Status: {{status}}")
            else:
                print(f"✗ ERROR: '{{function_name}}' is not callable")
        else:
            print(f"✗ ERROR: Function '{{function_name}}' not found")

    except Exception as e:
        print(f"✗ ERROR: {{str(e)}}")
        test_results.append({{
            'test_name': test_name,
            'error': str(e),
            'passed': False
        }})

# Summary
passed_tests = sum(1 for test in test_results if test.get('passed', False))
total_tests = len(test_results)
validation_results['tests_passed'] = passed_tests
validation_results['total_tests'] = total_tests
validation_results['success_rate'] = passed_tests / total_tests if total_tests > 0 else 0

print(f"\\n=== VALIDATION SUMMARY ===")
print(f"Tests passed: {{passed_tests}}/{{total_tests}}")
print(f"Success rate: {{validation_results['success_rate']:.1%}}")

test_results
"""
        return self.python_repl.run(validation_code)

The ResultValidator class builds on PythonREPLTool to automatically generate and run custom validation routines that check numerical properties, verify data structures, or run algorithm test cases against the agent's execution history. It emits Python snippets that extract outputs, compares them against the expected criteria, and summarizes the pass/fail results, closing the loop on “execute → validate” in the agent workflow.
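To make the numeric check concrete, here is a minimal standalone sketch of the same idea: pull numbers out of captured output and compare them against expected properties. The function names `extract_numbers` and `check_properties` are hypothetical, and the sketch runs directly rather than generating code for the REPL as the class does.

```python
import re
from typing import Any, Dict, List


def extract_numbers(text: str) -> List[float]:
    """Pull every unsigned integer or decimal literal out of a block of text."""
    return [float(n) for n in re.findall(r"\d+(?:\.\d+)?", text)]


def check_properties(output: str, expected: Dict[str, Any]) -> Dict[str, bool]:
    """Compare the numbers found in `output` against simple expected properties."""
    numbers = extract_numbers(output)
    checks: Dict[str, bool] = {}
    for prop, value in expected.items():
        if prop == "count":
            checks["count_check"] = len(numbers) == value
        elif prop == "max_value" and numbers:
            checks["max_check"] = max(numbers) <= value
        elif prop == "min_value" and numbers:
            checks["min_check"] = min(numbers) >= value
        elif prop == "sum_range" and numbers:
            low, high = value
            checks["sum_check"] = low <= sum(numbers) <= high
    return checks


# Illustrative output string, as a REPL run might have printed it.
sample_output = "primes: 2 3 5 7"
report = check_properties(
    sample_output,
    {"count": 4, "max_value": 10, "min_value": 2, "sum_range": (15, 20)},
)
print(report)
```

One limitation worth keeping in mind: the regular expression ignores minus signs and picks up every digit run in the text, so labels such as "below 200" contribute numbers too. Checks of this kind work best on output that prints only the values of interest.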

python_repl = PythonREPLTool()

validator = ResultValidator(python_repl)

Here we instantiate the PythonREPLTool as python_repl and then create a ResultValidator bound to that same REPL instance. This wiring means any code you execute is immediately available to the automated validation steps, closing the loop between execution and correctness checking.

python_tool = Tool(
    name="python_repl",
    description="Execute Python code and return both the code and its output. Maintains state between executions.",
    func=python_repl.run
)

validation_tool = Tool(
    name="result_validator",
    description="Validate the results of previous computations with specific test cases and expected properties.",
    func=lambda query: validator.validate_mathematical_result(query, {})
)

Here we wrap the REPL and validation methods in LangChain Tool objects, giving each a clear name and description. The agent can then call python_repl to run code and result_validator to automatically check the last execution against the specified criteria.
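The value of naming each tool is that an agent loop can look a tool up by the name the model emits. The sketch below illustrates only that lookup idea; it is not how LangChain implements Tool or AgentExecutor, and `SimpleTool`, `dispatch`, and the two demo tools are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a tool: a name, a description, a callable."""
    name: str
    description: str
    func: Callable[[str], str]


def dispatch(tools: Dict[str, SimpleTool], action: str, action_input: str) -> str:
    """Look up the tool the agent named and call it with the agent's input."""
    tool = tools.get(action.strip())
    if tool is None:
        return f"Unknown tool '{action}'. Available: {', '.join(tools)}"
    return tool.func(action_input)


registry = {
    tool.name: tool
    for tool in [
        SimpleTool("echo", "Return the input unchanged.", lambda text: text),
        SimpleTool("shout", "Return the input in upper case.", str.upper),
    ]
}

print(dispatch(registry, "shout", "validated"))
print(dispatch(registry, "missing", "anything"))
```

Returning a message for an unknown name, rather than raising, lets the calling loop feed the problem back to the model as an observation so it can pick a valid tool on the next step.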

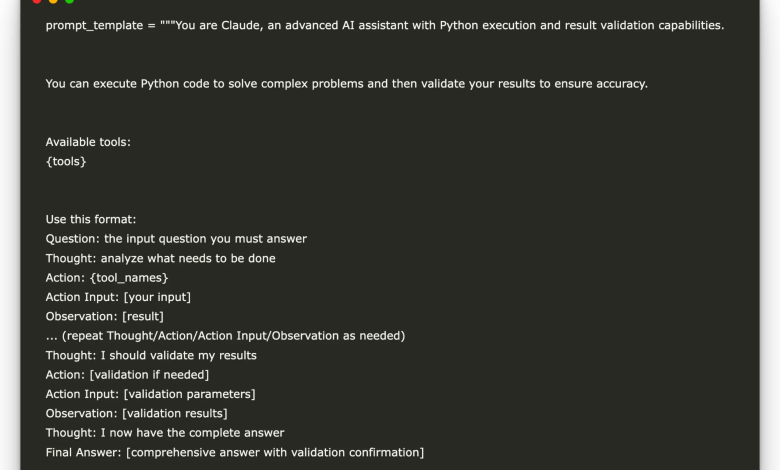

prompt_template = """You are Claude, an advanced AI assistant with Python execution and result validation capabilities.

You can execute Python code to solve complex problems and then validate your results to ensure accuracy.

Available tools:
{tools}

Use this format:
Question: the input question you must answer
Thought: analyze what needs to be done
Action: {tool_names}
Action Input: [your input]
Observation: [result]
... (repeat Thought/Action/Action Input/Observation as needed)
Thought: I should validate my results
Action: [validation if needed]
Action Input: [validation parameters]
Observation: [validation results]
Thought: I now have the complete answer
Final Answer: [comprehensive answer with validation confirmation]

Question: {input}
{agent_scratchpad}"""

prompt = PromptTemplate(
    template=prompt_template,
    input_variables=["input", "agent_scratchpad"],
    partial_variables={
        "tools": "python_repl - Execute Python code\nresult_validator - Validate computation results",
        "tool_names": "python_repl, result_validator"
    }
)

The prompt template above frames Claude as a dual-capability assistant that first reasons (“Thought”), then chooses between the python_repl and result_validator tools to run code and check its output, and iterates until it has a validated solution. By defining a clear chain structure with placeholders ({tools}, {tool_names}, {input}, {agent_scratchpad}) and a usage pattern, it guides the agent to (1) decompose the problem, (2) call python_repl to execute the necessary code, (3) call result_validator to confirm correctness, and (4) deliver a self-checked “Final Answer”. This scaffolding enforces a disciplined “write → run → validate” workflow.
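The Thought/Action/Action Input format matters because the surrounding loop has to parse each model turn to decide what to do next. The following is a simplified sketch of such a parser; it is not LangChain's own output parser, and `parse_step` and `ACTION_PATTERN` are hypothetical names.

```python
import re
from typing import Optional, Tuple

# Matches an "Action:" line followed by an "Action Input:" section.
ACTION_PATTERN = re.compile(
    r"Action:\s*(?P<tool>.+?)\s*\n+Action Input:\s*(?P<input>.*)",
    re.DOTALL,
)


def parse_step(text: str) -> Tuple[str, Optional[str], str]:
    """Classify one model turn as a final answer, a tool call, or unparseable.

    Returns a (kind, tool_name, payload) tuple.
    """
    if "Final Answer:" in text:
        return "final", None, text.split("Final Answer:", 1)[1].strip()
    match = ACTION_PATTERN.search(text)
    if match:
        return "action", match.group("tool").strip(), match.group("input").strip()
    return "unparseable", None, text.strip()


turn = (
    "Thought: I need the sum of the first five primes\n"
    "Action: python_repl\n"
    "Action Input: print(sum([2, 3, 5, 7, 11]))"
)
print(parse_step(turn))
print(parse_step("Thought: I now have the complete answer\nFinal Answer: 28"))
```

A turn that matches neither pattern is reported as unparseable instead of raising, which corresponds to the situation the executor's handle_parsing_errors option is meant to tolerate.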

class AdvancedClaudeCodeAgent:
    def __init__(self, anthropic_api_key=None):
        if anthropic_api_key:
            os.environ["ANTHROPIC_API_KEY"] = anthropic_api_key

        self.llm = ChatAnthropic(
            model="claude-3-opus-20240229",
            temperature=0,
            max_tokens=4000
        )

        self.agent = create_react_agent(
            llm=self.llm,
            tools=[python_tool, validation_tool],
            prompt=prompt
        )

        self.agent_executor = AgentExecutor(
            agent=self.agent,
            tools=[python_tool, validation_tool],
            verbose=True,
            handle_parsing_errors=True,
            max_iterations=8,
            return_intermediate_steps=True
        )

        self.python_repl = python_repl
        self.validator = validator

    def run(self, query: str) -> str:
        try:
            result = self.agent_executor.invoke({"input": query})
            return result["output"]
        except Exception as e:
            return f"Error: {str(e)}"

    def validate_last_result(self, description: str, validation_params: Dict[str, Any]) -> str:
        """Manually validate the last computation result"""
        if 'test_cases' in validation_params:
            return self.validator.validate_algorithm_correctness(description, validation_params['test_cases'])
        elif 'expected_structure' in validation_params:
            return self.validator.validate_data_analysis(description, validation_params['expected_structure'])
        else:
            return self.validator.validate_mathematical_result(description, validation_params)

    def get_execution_summary(self) -> Dict[str, Any]:
        """Get summary of all executions"""
        history = self.python_repl.get_execution_history()
        return {
            'total_executions': len(history),
            'successful_executions': len([h for h in history if not h['error']]),
            'failed_executions': len([h for h in history if h['error']]),
            'execution_details': history
        }

The AdvancedClaudeCodeAgent class wraps everything in a single, easy-to-use interface: it configures the Anthropic Claude client (using your API key), builds a ReAct-style agent from our python_repl and result_validator tools and the custom prompt, and sets up an executor to drive the iterative “think → code → validate” loop. Its run() method lets you submit a natural-language query and returns Claude's final, self-checked answer; validate_last_result() exposes a manual hook for additional checks; and get_execution_summary() provides a concise report on every snippet executed (how many succeeded, how many failed, and their details).
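When validate_last_result() receives a 'test_cases' entry, each case is a dictionary with name, function, input, and expected keys. The sketch below shows that shape with a small standalone runner; `run_test_cases` and the `gcd` example are hypothetical, and the runner looks functions up in a plain dictionary rather than in the REPL's namespace.

```python
from typing import Any, Dict, List


def run_test_cases(namespace: Dict[str, Any], test_cases: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Call each named function with its input and compare against `expected`."""
    results = []
    for index, case in enumerate(test_cases, start=1):
        name = case.get("name", f"Test {index}")
        func = namespace.get(case.get("function"))
        if not callable(func):
            results.append({"test_name": name, "error": "function not found", "passed": False})
            continue
        args = case.get("input")
        try:
            # Lists and tuples are unpacked as positional arguments.
            actual = func(*args) if isinstance(args, (list, tuple)) else func(args)
            results.append({"test_name": name, "actual": actual,
                            "passed": actual == case.get("expected")})
        except Exception as exc:
            results.append({"test_name": name, "error": str(exc), "passed": False})
    passed = sum(1 for r in results if r["passed"])
    return {
        "tests_passed": passed,
        "total_tests": len(results),
        "success_rate": passed / len(results) if results else 0,
        "details": results,
    }


def gcd(a: int, b: int) -> int:
    """Greatest common divisor via the Euclidean algorithm."""
    while b:
        a, b = b, a % b
    return a


cases = [
    {"name": "coprime", "function": "gcd", "input": [9, 28], "expected": 1},
    {"name": "common factor", "function": "gcd", "input": [12, 18], "expected": 6},
    {"name": "missing function", "function": "lcm", "input": [4, 6], "expected": 12},
]
summary = run_test_cases({"gcd": gcd}, cases)
print(summary["tests_passed"], "of", summary["total_tests"], "passed")
```

Because list and tuple inputs are unpacked into positional arguments, a function that takes a single list (a sorting routine, for example) needs its input wrapped once more, as in `"input": [[3, 1, 2]]`.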

if __name__ == "__main__":
    API_KEY = "Use Your Own Key Here"

    agent = AdvancedClaudeCodeAgent(anthropic_api_key=API_KEY)

    print("🚀 Advanced Claude Code Agent with Validation")
    print("=" * 60)

    print("\n🔢 Example 1: Prime Number Analysis with Twin Prime Detection")
    print("-" * 60)
    query1 = """
    Find all prime numbers between 1 and 200, then:
    1. Calculate their sum
    2. Find all twin prime pairs (primes that differ by 2)
    3. Calculate the average gap between consecutive primes
    4. Identify the largest prime gap in this range
    After computation, validate that we found the correct number of primes and that all identified numbers are actually prime.
    """
    result1 = agent.run(query1)
    print(result1)

    print("\n" + "=" * 80 + "\n")

    print("📊 Example 2: Advanced Sales Data Analysis with Statistical Validation")
    print("-" * 60)
    query2 = """
    Create a comprehensive sales analysis:
    1. Generate sales data for 12 products across 24 months with realistic seasonal patterns
    2. Calculate monthly growth rates, yearly totals, and trend analysis
    3. Identify top 3 performing products and worst 3 performing products
    4. Perform correlation analysis between different products
    5. Create summary statistics (mean, median, standard deviation, percentiles)
    After analysis, validate the data structure, ensure all calculations are mathematically correct, and verify the statistical measures.
    """
    result2 = agent.run(query2)
    print(result2)

    print("\n" + "=" * 80 + "\n")

    print("⚙️ Example 3: Advanced Algorithm Implementation with Test Suite")
    print("-" * 60)
    query3 = """
    Implement and validate a comprehensive sorting and searching system:
    1. Implement quicksort, mergesort, and binary search algorithms
    2. Create test data with various edge cases (empty lists, single elements, duplicates, sorted/reverse sorted)
    3. Benchmark the performance of different sorting algorithms
    4. Implement a function to find the kth largest element using different approaches
    5. Test all implementations with comprehensive test cases including edge cases
    After implementation, validate each algorithm with multiple test cases to ensure correctness.
    """
    result3 = agent.run(query3)
    print(result3)

    print("\n" + "=" * 80 + "\n")

    print("🤖 Example 4: Machine Learning Model with Cross-Validation")
    print("-" * 60)
    query4 = """
    Build a complete machine learning pipeline:
    1. Generate a synthetic dataset with features and target variable (classification problem)
    2. Implement data preprocessing (normalization, feature scaling)
    3. Implement a simple linear classifier from scratch (gradient descent)
    4. Split data into train/validation/test sets
    5. Train the model and evaluate performance (accuracy, precision, recall)
    6. Implement k-fold cross-validation
    7. Compare results with different hyperparameters
    Validate the entire pipeline by ensuring mathematical correctness of gradient descent, proper data splitting, and realistic performance metrics.
    """
    result4 = agent.run(query4)
    print(result4)

    print("\n" + "=" * 80 + "\n")

    print("📋 Execution Summary")
    print("-" * 60)
    summary = agent.get_execution_summary()
    print(f"Total code executions: {summary['total_executions']}")
    print(f"Successful executions: {summary['successful_executions']}")
    print(f"Failed executions: {summary['failed_executions']}")

    if summary['failed_executions'] > 0:
        print("\nFailed executions details:")
        for i, execution in enumerate(summary['execution_details']):
            if execution['error']:
                print(f" {i+1}. Error: {execution['error']}")

    # Skip the rate when nothing ran, to avoid dividing by zero.
    if summary['total_executions'] > 0:
        print(f"\nSuccess rate: {(summary['successful_executions']/summary['total_executions']*100):.1f}%")

Finally, we instantiate AdvancedClaudeCodeAgent with your Anthropic API key, run four illustrative queries (prime-number analysis, sales data analysis, algorithm implementation, and a simple ML pipeline), and print each validated result. We then gather and display a concise execution summary (total runs, successes, failures, and error details) to demonstrate the agent's live “write → run → validate” workflow.
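The summary step can be tried without an API key. Below is a minimal sketch, assuming history records shaped like the ones PythonREPLTool stores; the `summarize` function and the sample records are made up for illustration and are not output from a real agent run.

```python
from typing import Any, Dict, List


def summarize(history: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Count successes and failures in a list of execution records."""
    failed = [entry for entry in history if entry["error"]]
    total = len(history)
    succeeded = total - len(failed)
    return {
        "total_executions": total,
        "successful_executions": succeeded,
        "failed_executions": len(failed),
        # An empty history has no meaningful rate, so report None instead.
        "success_rate": succeeded / total * 100 if total else None,
    }


# Made-up records with the same keys as the tool's history entries.
sample_history = [
    {"code": "1 + 1", "output": "2\n", "result": 2, "error": ""},
    {"code": "1 / 0", "output": "", "result": None, "error": "division by zero"},
    {"code": "print('ok')", "output": "ok\n", "result": None, "error": ""},
    {"code": "len('abcd')", "output": "4\n", "result": 4, "error": ""},
]

report = summarize(sample_history)
print(f"Total: {report['total_executions']}, failed: {report['failed_executions']}")
print(f"Success rate: {report['success_rate']:.1f}%")
```

Note that a record counts as failed whenever its error field is non-empty, so a snippet that merely writes a warning to stderr would be counted as a failure under this rule.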

In conclusion, we have developed a versatile AdvancedClaudeCodeAgent that combines generative reasoning with precise computational control. At its core, this agent does not just draft Python snippets: it runs them on the spot and checks their correctness against the criteria you specify, closing the feedback loop automatically. Whether you are performing prime-number analysis, statistical data evaluation, algorithm benchmarking, or end-to-end ML workflows, this pattern promotes reliability and reproducibility.

Check out the notebook on GitHub. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that offers in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among readers.