Can LLMs really judge with reasoning? Microsoft and Tsinghua researchers introduce Reward Reasoning Models to dynamically scale test-time compute for better alignment

Reinforcement learning (RL) has become a fundamental approach to LLM post-training. It uses supervision signals from either human feedback (RLHF) or verifiable rewards (RLVR). Although RLVR shows promise in mathematical reasoning, it faces significant limitations because it depends on training queries with verifiable answers. This requirement limits its use for large-scale training on general-domain queries, where verifying answers proves difficult. Furthermore, current reward models, which fall into scalar and generative types, cannot effectively scale test-time compute for reward estimation. Existing approaches apply uniform computational resources across all inputs. They lack the adaptability to allocate additional resources to challenging queries that require nuanced analysis.
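RLVR's dependence on verifiable answers becomes clear once you look at what its reward function needs. The sketch below is a minimal, hypothetical example and is not taken from the paper. It assumes the policy writes its final answer inside `\boxed{...}`, and it scores an exact string match against a reference answer. For an open-ended request such as drafting an email, no reference string exists to compare against, and that is the limitation described above.

```python
import re
from typing import Optional

# Hypothetical answer format: the policy is asked to put its final answer in \boxed{...}.
BOXED_RE = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a completion, or None."""
    matches = BOXED_RE.findall(completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary RLVR-style reward: 1.0 on an exact match with the reference answer."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # no parseable answer, no reward
    return 1.0 if answer == reference.strip() else 0.0


print(verifiable_reward(r"... so the total is \boxed{42}", "42"))  # → 1.0
print(verifiable_reward("The answer is probably 42.", "42"))        # → 0.0
```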

Reward models are characterized by their formulation strategies and scoring schemes. Numeric approaches assign scalar scores to query-response pairs, while generative approaches produce natural-language feedback. Scoring follows one of two patterns. The first is absolute evaluation of individual responses, and the second is discriminative comparison of candidate responses. Generative reward models follow the LLM-as-a-Judge paradigm. They provide interpretable feedback but face reliability concerns due to biased judgments. Inference-time scaling methods dynamically adjust computational resources. These methods include parallel strategies such as sampling multiple outputs, and horizon-based scaling that extends reasoning traces. However, they lack systematic adaptation to input complexity, which limits their effectiveness across diverse query types.
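The Bradley-Terry model is a common way to link scalar (absolute) scores to pairwise (discriminative) comparisons. Under it, the probability that response A is preferred over response B equals the sigmoid of the score difference. The article does not say which link function any particular reward model uses. This is a generic sketch with made-up scores.

```python
import math


def bradley_terry_prob(score_a: float, score_b: float) -> float:
    """P(response A preferred over response B) = sigmoid(score_a - score_b)."""
    diff = score_a - score_b
    # Branch on the sign so math.exp never receives a large positive argument.
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    z = math.exp(diff)
    return z / (1.0 + z)


# Made-up absolute (pointwise) scores a scalar reward model might assign
# to two responses for the same query.
score_a, score_b = 1.3, 0.2

# The same two scores read as a pairwise (discriminative) comparison.
print(f"P(A > B) = {bradley_terry_prob(score_a, score_b):.3f}")  # → P(A > B) = 0.750
```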

Researchers at Microsoft Research, Tsinghua University, and Peking University proposed Reward Reasoning Models (RRMs), which perform explicit reasoning before producing a final reward. This reasoning phase lets RRMs adaptively allocate additional computational resources when they evaluate responses to complex tasks. RRMs add a new dimension to reward modeling: they scale test-time compute while remaining generally applicable across diverse evaluation scenarios. Through chain-of-thought reasoning, RRMs spend additional test-time compute on complex queries where the appropriate reward is not immediately obvious. This setup encourages RRMs to self-evolve reward-reasoning capabilities without explicit reasoning traces as training data.
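A small parser that separates the thinking trace from the final verdict illustrates the "reason first, then judge" output. The `<think>` wrapper and the `\boxed{Assistant 1}` / `\boxed{Assistant 2}` verdict strings are assumptions made for this sketch. They are not a documented RRM output format. The whitespace-token count of the reasoning is only a rough proxy for how much test-time compute the model chose to spend.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical output format assumed for this sketch:
#   <think> free-form reasoning </think> ... \boxed{Assistant 1}  (or Assistant 2)
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
VERDICT_RE = re.compile(r"\\boxed\{Assistant ([12])\}")


@dataclass
class Judgment:
    reasoning: str          # thinking trace produced before the verdict
    verdict: Optional[int]  # 1 or 2 for the preferred response, None if unparseable

    @property
    def reasoning_length(self) -> int:
        # Whitespace-token count: a rough proxy for test-time compute spent thinking.
        return len(self.reasoning.split())


def parse_judgment(completion: str) -> Judgment:
    think = THINK_RE.search(completion)
    reasoning = think.group(1).strip() if think else ""
    # Search for the verdict only after the thinking block, so a verdict-like
    # string quoted mid-reasoning is not mistaken for the final decision.
    tail = completion[think.end():] if think else completion
    verdicts = VERDICT_RE.findall(tail)
    return Judgment(reasoning, int(verdicts[-1]) if verdicts else None)


example = (
    "<think>Response 1 answers the question but skips a step; "
    "response 2 is complete and correct.</think>\n"
    "Final decision: \\boxed{Assistant 2}"
)
j = parse_judgment(example)
print(j.verdict, j.reasoning_length)  # → 2 15
```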

RRMs use a Qwen2 model with a Transformer-decoder backbone and formulate reward modeling as text completion. The RRM autoregressively generates a thinking process, followed by a final judgment. Each input contains one query and two responses, and the model must state a preference because ties are not allowed. The researchers use the RewardBench repository to guide systematic analysis across evaluation criteria. These criteria include instruction fidelity, helpfulness, accuracy, harmlessness, and level of detail. RRMs support multi-response evaluation through ELO rating systems and knockout tournaments. Both methods can be combined with majority voting to make better use of test-time compute. Majority voting samples the RRM several times for each pairwise comparison and takes the majority verdict, which yields robust comparison results.
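A knockout tournament combined with majority voting, as described above, can be sketched as follows. Several pieces are hypothetical stand-ins for a real RRM call. These are the `Judge` interface, which returns 0 when the first response wins and 1 otherwise, plus the odd vote count, the bye rule for an odd number of candidates, and the toy length-preferring judge.

```python
import random
from collections import Counter
from typing import Callable, List

# Hypothetical judge interface: judge(query, first, second) returns 0 if the
# first response is preferred and 1 if the second is (ties are not allowed).
Judge = Callable[[str, str, str], int]


def majority_vote(judge: Judge, query: str, a: str, b: str, k: int) -> int:
    """Sample the judge k times and return the winning index (0 or 1)."""
    if k < 1 or k % 2 == 0:
        raise ValueError("k must be a positive odd number so the vote cannot tie")
    votes = Counter(judge(query, a, b) for _ in range(k))
    return 0 if votes[0] > votes[1] else 1


def knockout(judge: Judge, query: str, candidates: List[str], k: int = 5) -> str:
    """Single-elimination tournament; each match is decided by majority vote."""
    if not candidates:
        raise ValueError("need at least one candidate")
    pool = list(candidates)
    while len(pool) > 1:
        next_round: List[str] = []
        if len(pool) % 2 == 1:
            next_round.append(pool.pop())  # odd one out gets a bye
        for i in range(0, len(pool), 2):
            a, b = pool[i], pool[i + 1]
            next_round.append(a if majority_vote(judge, query, a, b, k) == 0 else b)
        pool = next_round
    return pool[0]


# Toy stand-in for an RRM: prefers the longer response 80% of the time.
rng = random.Random(0)


def noisy_length_judge(query: str, a: str, b: str) -> int:
    better = 0 if len(a) >= len(b) else 1
    return better if rng.random() < 0.8 else 1 - better


print(knockout(noisy_length_judge, "q", ["a", "bbb", "cc", "dddd", "eeeee"], k=7))
```

A knockout over N candidates needs only N - 1 matches, and each match costs k judge samples. Raising k is one way to trade extra test-time compute for more reliable match outcomes.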

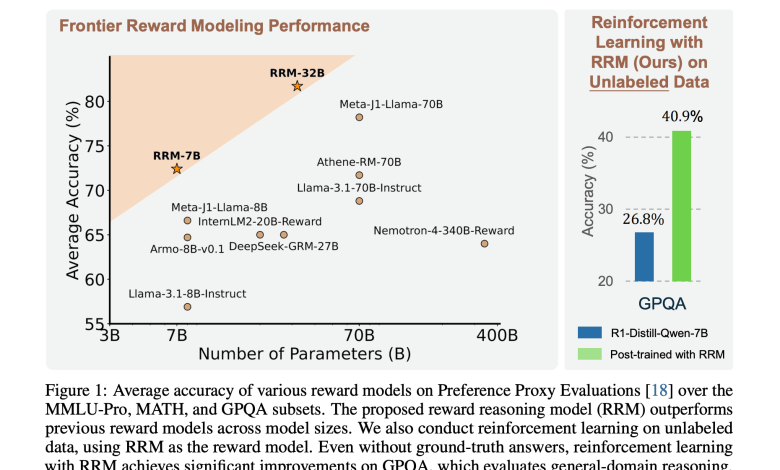

The evaluation results show that RRMs achieve competitive performance against strong baselines on the RewardBench and PandaLM Test benchmarks, and RRM-32B reaches 98.6% accuracy in the reasoning category. The researchers compared RRMs with models trained on the same data to judge directly, without reasoning. The substantial performance gap between them indicates that RRMs use test-time compute effectively for complex queries. In reward-guided best-of-N inference, RRMs surpass all baseline models even without additional test-time compute, and majority voting brings substantial further gains across the evaluated subsets. Post-training experiments show steady improvements in downstream performance on MMLU-Pro and GPQA. Scaling experiments across 7B, 14B, and 32B models confirm that longer thinking horizons consistently improve accuracy.
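The ELO option can also drive reward-guided best-of-N inference by ranking N candidates from their pairwise outcomes. This sketch makes several assumptions that do not come from the article: a round-robin schedule, standard Elo updates with a K-factor of 32 and an initial rating of 1000, and a toy judge in place of an RRM.

```python
from itertools import combinations
from typing import Callable, Dict, List

# Hypothetical judge interface: 0 if the first response wins, 1 if the second does.
Judge = Callable[[str, str, str], int]


def expected_score(r_a: float, r_b: float) -> float:
    """Standard Elo expectation that a player rated r_a beats one rated r_b."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_best_of_n(judge: Judge, query: str, candidates: List[str],
                  k_factor: float = 32.0, initial: float = 1000.0) -> str:
    """Rate candidates from round-robin pairwise judgments; return the top-rated one."""
    if not candidates:
        raise ValueError("need at least one candidate")
    ratings: Dict[int, float] = {i: initial for i in range(len(candidates))}
    for i, j in combinations(range(len(candidates)), 2):
        s_i = 1.0 if judge(query, candidates[i], candidates[j]) == 0 else 0.0
        delta = k_factor * (s_i - expected_score(ratings[i], ratings[j]))
        ratings[i] += delta  # zero-sum update: whatever i gains, j loses
        ratings[j] -= delta
    best = max(ratings, key=lambda idx: ratings[idx])
    return candidates[best]


# Toy deterministic judge standing in for an RRM: the longer response wins.
def length_judge(query: str, a: str, b: str) -> int:
    return 0 if len(a) >= len(b) else 1


print(elo_best_of_n(length_judge, "q", ["dddd", "a", "bb", "ccc"]))  # → dddd
```

Round-robin ELO costs N(N - 1)/2 comparisons, versus N - 1 for a knockout. In exchange, it produces a full ranking rather than a single winner.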

In summary, the researchers introduced RRMs to address the computational inflexibility of existing reward modeling approaches. RRMs perform an explicit reasoning process before they assign rewards. Rule-based-reward RL enables RRMs to develop complex reasoning capabilities without requiring explicit reasoning traces as supervision. RRMs make efficient use of test-time compute through both parallel and sequential scaling approaches. RRMs prove effective in practical applications, including reward-guided best-of-N inference and post-training feedback. This effectiveness shows their potential as strong alternatives to traditional scalar reward models in alignment techniques.
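The following sketch shows one possible rule-based reward for training a pairwise judge with RL. It is a hypothesis, not the paper's documented setup. The reward is +1 when the final verdict matches the labeled preferred response and -1 otherwise, including unparseable outputs. The `\boxed{Assistant N}` verdict format is also assumed. Because only the verdict is scored, the reasoning trace needs no reference supervision.

```python
import re
from typing import Optional

# Hypothetical verdict format: the judge ends with \boxed{Assistant 1} or \boxed{Assistant 2}.
VERDICT_RE = re.compile(r"\\boxed\{Assistant ([12])\}")


def parse_verdict(completion: str) -> Optional[int]:
    found = VERDICT_RE.findall(completion)
    return int(found[-1]) if found else None  # last verdict wins


def rule_based_reward(completion: str, preferred: int) -> float:
    """+1 if the final verdict names the labeled preferred response, else -1.

    Only the verdict is checked; the reasoning before it is never scored, so
    no reference reasoning traces are needed as supervision.
    """
    return 1.0 if parse_verdict(completion) == preferred else -1.0


print(rule_based_reward("<think>...</think> \\boxed{Assistant 2}", preferred=2))  # → 1.0
print(rule_based_reward("I cannot decide.", preferred=1))                        # → -1.0
```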

Check out the paper and the models on Hugging Face. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final-year undergraduate student at IIT Kharagpur. A technology enthusiast, he explores the practical applications of AI, focusing on AI technologies and their real-world impact. He aims to explain complex AI concepts clearly and accessibly.