NVIDIA AI Introduces AceReason-Nemotron to Advance Math and Code Reasoning through Reinforcement Learning

Reasoning capability is a fundamental component of AI systems. The introduction of OpenAI o1 sparked significant interest in building reasoning models through large-scale reinforcement learning (RL). While the open-sourcing of DeepSeek-R1 has enabled the community to develop state-of-the-art reasoning models, the original report omits key technical details, including data curation strategies and the specific RL training recipe. This omission has left researchers struggling to replicate its success, leading to fragmented efforts that explore different model sizes, initial checkpoints, distilled reasoning models, and target domains such as code and physical AI, without arriving at a conclusive or consistent training recipe.

Work on training language models for reasoning has focused on the math and code domains, using pretraining and supervised fine-tuning. Early RL attempts using domain-specific reward models showed limited gains because of challenges inherent to mathematical and coding tasks. More recent efforts following the release of DeepSeek-R1 explore rule-based verification, where math problems require a specific output format so that answers can be verified accurately, and code problems rely on compilation and execution feedback. However, these approaches focus on a single domain rather than handling heterogeneous prompts, limit benchmark evaluation to AIME and LiveCodeBench, and suffer from training instability that requires techniques such as progressively increasing the response length and aggressive measures to mitigate collapse.
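To make the idea of rule-based verification concrete, here is a minimal sketch of what such binary rewards could look like. It is not the implementation used in any of the works mentioned: the `\boxed{...}` answer format, the string normalization, and the helper names (`extract_boxed`, `math_reward`, `code_reward`) are all assumptions chosen for illustration.

```python
import re
import subprocess
import sys
from typing import List, Optional, Tuple


def extract_boxed(text: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in text, handling nested braces."""
    marker = "\\boxed{"
    start = text.rfind(marker)
    if start == -1:
        return None
    depth = 1
    out = []
    for ch in text[start + len(marker):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(out)
        out.append(ch)
    return None  # unbalanced braces: treat as a format failure


def normalize(answer: str) -> str:
    """Very light normalization (assumption): drop whitespace and a trailing period."""
    return re.sub(r"\s+", "", answer).rstrip(".")


def math_reward(response: str, reference: str) -> float:
    """1.0 if the boxed answer matches the reference, else 0.0 (including format errors)."""
    predicted = extract_boxed(response)
    if predicted is None:
        return 0.0
    return 1.0 if normalize(predicted) == normalize(reference) else 0.0


def code_reward(program: str, tests: List[Tuple[str, str]], timeout_s: float = 5.0) -> float:
    """1.0 only if the program passes every (stdin, expected stdout) test case.

    Runs the candidate as a Python script; a real pipeline would sandbox this.
    """
    for stdin_text, expected in tests:
        try:
            result = subprocess.run(
                [sys.executable, "-c", program],
                input=stdin_text,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0
        if result.returncode != 0 or result.stdout.strip() != expected.strip():
            return 0.0
    return 1.0
```

A response that skips the required format earns no reward even when its reasoning is right, which is why these methods depend on a specific output format.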

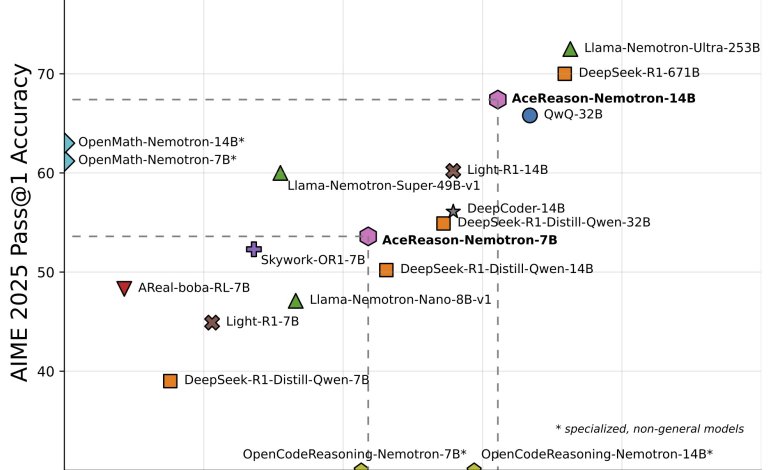

Researchers from NVIDIA show that large-scale RL can significantly enhance the reasoning capabilities of strong small- and mid-sized models, outperforming state-of-the-art distillation-based approaches. The method uses a simple yet effective sequential training strategy: RL training is first performed on math-only prompts, followed by code-only prompts. The results reveal that math-only RL not only improves performance on math benchmarks but also improves code reasoning, while extended code-only RL iterations further improve code performance with minimal degradation in math results. In addition, a robust data curation pipeline is developed to collect challenging prompts with high-quality, verifiable answers and test cases, enabling verification-based RL across both domains.
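The sequential strategy can be pictured as a two-stage prompt schedule. The sketch below only illustrates the ordering of the stages. The `Stage` class, the `sequential_batches` helper, the step counts, and the batch size are hypothetical and are not taken from the paper.

```python
import random
from dataclasses import dataclass
from typing import Iterator, List, Sequence, Tuple


@dataclass(frozen=True)
class Stage:
    name: str               # e.g. "math" or "code"
    prompts: Sequence[str]  # prompts drawn from a single domain
    steps: int              # number of RL steps for this stage (hypothetical)


def sequential_batches(
    stages: Sequence[Stage], batch_size: int, seed: int = 0
) -> Iterator[Tuple[str, List[str]]]:
    """Yield (stage name, prompt batch) pairs, finishing each stage before the next."""
    rng = random.Random(seed)
    for stage in stages:
        pool = list(stage.prompts)
        for _ in range(stage.steps):
            # Sample without replacement within a batch; never mix domains.
            yield stage.name, rng.sample(pool, k=min(batch_size, len(pool)))


# Math-only prompts first, then code-only prompts.
schedule = [
    Stage("math", ["math prompt 1", "math prompt 2", "math prompt 3"], steps=2),
    Stage("code", ["code prompt 1", "code prompt 2"], steps=1),
]
for stage_name, batch in sequential_batches(schedule, batch_size=2):
    pass  # a real loop would generate rollouts, score them, and update the policy
```

Keeping the domains in separate stages means each stage can use a single verifier and its own response-length settings, rather than juggling both in one batch.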

The method curates data separately for math-only and code-only RL. For math-only RL, the pipeline merges the DeepScaler and NuminaMath datasets, covering algebra, combinatorics, number theory, and geometry, and applies 9-gram filtering along with strict exclusion rules for unsuitable content. The DeepSeek-R1 model validates each question with eight attempts, and only questions whose majority-voted solution is confirmed correct by rule-based verification are retained. The code-only RL dataset is curated from modern competitive programming platforms, using function-calling and stdin/stdout formats across algorithmic topics. The researchers also filter out incompatible problems, curate comprehensive test cases that cover edge cases, and assign difficulty scores using DeepSeek-R1-671B evaluation, resulting in 8,520 verified coding problems.
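The two math filtering steps can be sketched in a few lines. This is an illustrative approximation, not the authors' pipeline: it assumes the 9-gram filter drops problems that share any 9-gram with a set of benchmark texts, uses whitespace tokenization, and treats "majority" as a strict majority of the eight attempts. The function names are hypothetical.

```python
from collections import Counter
from typing import List, Optional, Set, Tuple

NGram = Tuple[str, ...]


def ngrams(text: str, n: int = 9) -> Set[NGram]:
    """All word-level n-grams of a text (lowercased, whitespace-tokenized)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def is_contaminated(problem: str, benchmark_ngrams: Set[NGram], n: int = 9) -> bool:
    """True if the problem shares at least one n-gram with the benchmark set."""
    return not ngrams(problem, n).isdisjoint(benchmark_ngrams)


def majority_answer(answers: List[Optional[str]]) -> Optional[str]:
    """Most common extracted answer if it wins a strict majority of all attempts."""
    counts = Counter(a for a in answers if a is not None)
    if not counts:
        return None
    answer, votes = counts.most_common(1)[0]
    return answer if votes > len(answers) / 2 else None


def keep_problem(
    problem: str,
    reference: str,
    attempts: List[Optional[str]],
    benchmark_ngrams: Set[NGram],
) -> bool:
    """Keep a problem only if it is not contaminated and the voted answer matches."""
    if is_contaminated(problem, benchmark_ngrams):
        return False
    return majority_answer(attempts) == reference


# Eight attempts (None marks an attempt with no parsable answer).
attempts = ["42", "42", "41", "42", None, "42", "42", "40"]
print(majority_answer(attempts))  # prints 42 (5 of 8 votes)
```

A plurality rule or a different tokenizer would change which problems survive, so the thresholds here should be read as placeholders.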

The results show that, compared with the initial SFT model, AceReason-Nemotron-7B improves accuracy by 14.5% and 14.6% on AIME 2024/2025, respectively, and by 14.2% and 8% on LiveCodeBench v5/v6. The 14B variant outperforms larger models such as DeepSeek-R1-Distill-Qwen-32B and DeepSeek-R1-Distill-Llama-70B, achieving best-in-class results among open RL-based reasoning models. Compared with state-of-the-art distillation-based models, AceReason-Nemotron-14B is ahead by 2.1%/4.4% on the AIME benchmarks and outperforms OpenCodeReasoning-14B on LiveCodeBench, indicating that RL can reach higher performance than distillation while remaining competitive with frontier models such as o3-mini.

In this paper, the researchers show that large-scale RL enhances the reasoning capabilities of strong small- and mid-sized SFT models through sequential, domain-specific training. The proposed approach of performing math-only RL followed by RL on code-only prompts shows that training on mathematical reasoning significantly improves performance on both math and coding benchmarks. The data curation pipeline collects challenging prompts with high-quality, verifiable answers and test cases, enabling verification-based RL across heterogeneous domains. The findings indicate that RL pushes the limits of model reasoning, yields solutions to previously unsolvable problems, and sets new performance benchmarks for reasoning model development.

Check out the paper and the model on Hugging Face. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final year undergraduate student from IIT Kharagpur. As a technology enthusiast, he delves into the practical application of AI, focusing on understanding AI technology and its real-world impact. He aims to express complex AI concepts in a clear and easy way.