Qwen Researchers Propose QwenLong-L1: A Reinforcement Learning Framework for Long-Context Reasoning in Large Language Models

Although large reasoning models (LRMs) show impressive short-context reasoning abilities through reinforcement learning (RL), these gains do not generalize well to long-context scenarios. Applications such as multi-document QA, research synthesis, and legal or financial analysis require models to process and reason over sequences exceeding 100K tokens. However, RL optimization in this regime suffers from slower reward convergence, unstable policy updates caused by KL divergence fluctuations, and reduced exploration resulting from entropy collapse. These bottlenecks reveal a fundamental gap in transitioning LRMs from short-context proficiency to long-context generalization.

QwenLong-L1: A Structured RL Framework for Long-Context Adaptation

To address these limitations, the Qwen research team introduced QwenLong-L1, a novel RL framework designed to adapt LRMs to long-context reasoning tasks. The framework is organized into three key stages:

- Warm-up supervised fine-tuning (SFT): Training on curated question-context-answer triplets provides a stable initialization for the policy model, ensuring basic competence in contextual comprehension and answer extraction.

- Curriculum-guided phased reinforcement learning: Training proceeds in stages with gradually increasing context lengths. This progression lets the model acquire long-context reasoning behaviors incrementally without destabilizing policy updates.

- Difficulty-aware retrospective sampling: Exploration is improved by retaining and reusing hard examples from previous stages, weighted by their difficulty, to encourage deeper reasoning and robustness across diverse inputs.

These stages are complemented by a hybrid reward mechanism that combines rule-based exact-match verification with semantic evaluation by a lightweight LLM, ensuring both precision and recall during policy training.
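As a rough illustration of the retrospective sampling stage, the sketch below draws earlier-stage examples with probability proportional to a difficulty score. The scoring rule (one minus the mean reward), the function names, and the data layout are assumptions made for this example, not details taken from the article.

```python
import random


def difficulty(mean_reward: float) -> float:
    """Hypothetical difficulty score: a lower average reward means a harder example."""
    return 1.0 - mean_reward


def retrospective_sample(prev_stage, k, rng=None):
    """Draw k example ids from an earlier stage, weighted by difficulty.

    prev_stage: list of (example_id, mean_reward) pairs with rewards in [0, 1].
    Sampling is with replacement, so a very hard example may appear more than once.
    """
    rng = rng or random.Random(0)
    # A small floor keeps fully solved examples at a tiny but nonzero weight.
    weights = [max(difficulty(reward), 1e-6) for _, reward in prev_stage]
    ids = [example_id for example_id, _ in prev_stage]
    return rng.choices(ids, weights=weights, k=k)


if __name__ == "__main__":
    history = [("solved", 1.0), ("borderline", 0.5), ("hard", 0.0)]
    print(retrospective_sample(history, k=5))
```

Weighting by difficulty keeps the later stages focused on cases the policy has not yet mastered, while the floor avoids excluding any example outright.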

Technical Design and Methodological Advantages

QwenLong-L1 integrates recent advances in group-relative RL optimization, specifically GRPO and DAPO, to reduce the computational overhead associated with long-context value estimation:

- GRPO estimates the advantage by normalizing rewards within a sampled group, eliminating the need for a separate value network and encouraging diverse generation patterns.

- DAPO incorporates mechanisms such as dynamic sampling, an overlength penalty, and asymmetric clipping thresholds to prevent entropy collapse and mitigate length bias during training.
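The two ideas in this list can be sketched in a few lines. The first function normalizes rewards within one sampled group to get advantages without a value network. The second applies a clipped surrogate term whose lower and upper bounds differ. The function names and the epsilon values are illustrative assumptions, not settings reported in the article.

```python
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-6):
    """Group-relative advantages: standardize each reward within its sampled group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_term(ratio, advantage, eps_low=0.2, eps_high=0.28):
    """Per-token surrogate with asymmetric clipping of the probability ratio.

    ratio is pi_new / pi_old for one token. The eps_low and eps_high defaults
    are illustrative values only.
    """
    clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped * advantage)


if __name__ == "__main__":
    print(group_advantages([1.0, 0.0, 1.0, 0.0]))
    print(clipped_term(1.5, 1.0))
```

A group whose rewards are all identical yields all-zero advantages and therefore no gradient signal, which is the kind of case that dynamic sampling is meant to avoid.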

The reward function is defined as the maximum of two signals: a deterministic rule-based match and a semantic judgment from a compact evaluator model (e.g., Qwen2.5-1.5B). This hybrid approach avoids overfitting to rigid formats while maintaining answer correctness across varied notations and phrasings.
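A minimal sketch of the max-of-two-signals reward follows. The normalization rule and the `judge` callable are placeholders assumed for this example. In practice the judge would wrap a call to a small evaluator model, which is not shown here.

```python
import re
from typing import Callable


def normalize(text: str) -> str:
    """Lowercase, trim, and collapse whitespace before comparing answers."""
    return re.sub(r"\s+", " ", text.strip().lower())


def rule_reward(prediction: str, gold: str) -> float:
    """Deterministic exact-match check on normalized strings."""
    return 1.0 if normalize(prediction) == normalize(gold) else 0.0


def hybrid_reward(prediction: str, gold: str,
                  judge: Callable[[str, str], float]) -> float:
    """Final reward is the maximum of the rule-based and judge-based signals.

    judge is a hypothetical callable returning a score in [0, 1].
    """
    return max(rule_reward(prediction, gold), judge(prediction, gold))


if __name__ == "__main__":
    def always_no(prediction: str, gold: str) -> float:
        # Stand-in judge that never accepts an answer.
        return 0.0

    print(hybrid_reward("  Paris ", "paris", always_no))
```

Taking the maximum means a correct answer is rewarded if either check accepts it, so an answer such as "10%" against a gold label of "0.1" can still score when the judge recognizes the equivalence.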

In addition, the framework is optimized via progressive context scaling, where the RL process transitions from 20K-token to 60K-token input lengths in controlled phases, stabilizing training dynamics and facilitating policy generalization.
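One way to picture the 20K-to-60K schedule is as a bucketing step that assigns each training example to the first phase whose length limit it fits. The bucketing rule, the function names, and the choice to drop over-limit examples are assumptions made for this sketch. The article states only the two length limits.

```python
def phase_for_length(n_tokens, phase_limits=(20_000, 60_000)):
    """Return the index of the first phase whose limit fits the input, else None."""
    for index, limit in enumerate(phase_limits):
        if n_tokens <= limit:
            return index
    return None


def build_curriculum(examples, phase_limits=(20_000, 60_000)):
    """Group (example_id, n_tokens) pairs into one list of ids per phase.

    Examples longer than the final limit are dropped in this sketch.
    """
    phases = [[] for _ in phase_limits]
    for example_id, n_tokens in examples:
        index = phase_for_length(n_tokens, phase_limits)
        if index is not None:
            phases[index].append(example_id)
    return phases


if __name__ == "__main__":
    data = [("a", 5_000), ("b", 45_000), ("c", 90_000)]
    print(build_curriculum(data))
```

Training would then run over the phases in order, so the policy sees shorter inputs before longer ones.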

Experimental Results and Benchmark Performance

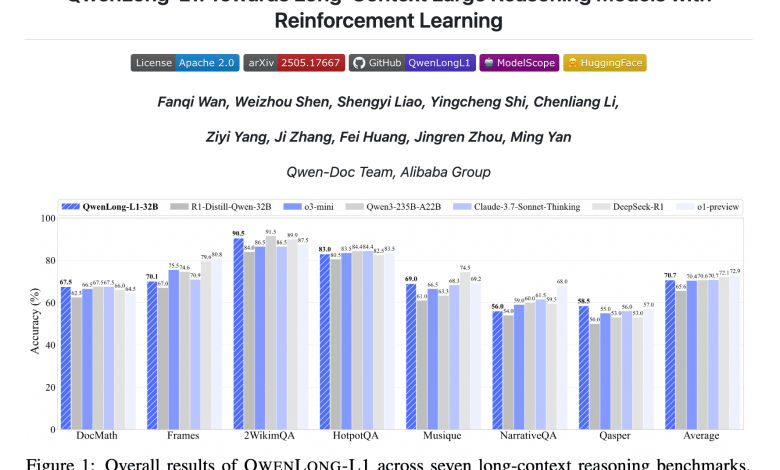

QwenLong-L1 was evaluated on seven long-context document QA benchmarks: DocMath, Frames, 2WikiMultihopQA, HotpotQA, MuSiQue, NarrativeQA, and Qasper. The 32B variant, QwenLong-L1-32B, demonstrated strong empirical performance:

- It outperformed baseline models such as R1-Distill-Qwen-32B by 5.1 points and surpassed flagship systems such as OpenAI-o3-mini and Qwen3-235B-A22B.

- Its performance was comparable to Claude-3.7-Sonnet-Thinking, indicating competitive reasoning capabilities at extreme context lengths.

- Pass@K analysis showed consistent improvements with increased sampling, reaching a Pass@2 average of 73.7 and surpassing DeepSeek-R1 and OpenAI-o1-preview, even at low sampling rates.
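For readers unfamiliar with the pass@k metric mentioned above, the sketch below shows the commonly used unbiased estimator: given n samples per question of which c are correct, it is the probability that at least one of k randomly chosen samples is correct. The article does not say how its scores were computed, so this is background on the metric rather than a description of the authors' evaluation code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate: 1 - C(n - c, k) / C(n, k).

    n: samples drawn per question, c: number of correct samples, k: budget.
    """
    if not 0 < k <= n:
        raise ValueError("k must satisfy 0 < k <= n")
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    # comb(n - c, k) is 0 when fewer than k incorrect samples exist.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    print(pass_at_k(n=2, c=1, k=1))
    print(pass_at_k(n=2, c=1, k=2))
```

A benchmark-level score is then the mean of this value over all questions.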

Ablation studies further validated the individual contributions of SFT, phased RL, and retrospective sampling. Notably, RL played a decisive role in enabling emergent reasoning behaviors such as grounding, subgoal setting, verification, and backtracking, which supervised fine-tuning alone did not effectively induce.

Conclusion

QwenLong-L1 represents a systematic approach to equipping LRMs with robust long-context reasoning capabilities through reinforcement learning. Its design bridges the gap between short-context expertise and the demands of information-dense environments by combining supervised initialization, curriculum-driven context scaling, and hybrid evaluation strategies. The framework not only achieves state-of-the-art results across long-context benchmarks but also demonstrates the emergence of interpretable reasoning patterns during training.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among its audience.